Pre-existing DAGs still worked properly, but for new DAGs I saw the error you mentioned. While debugging, I found in the scheduler logs what seems to be the cause of the problem: a null value for the id column, which prevents the DAG from being loaded. I also saw similar errors when running airflow db upgrade.

After checking the ab_view_menu database table, I noticed that a sequence exists for its primary key (ab_view_menu_id_seq), but it was not linked to the column.
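If the ab_view_menu_id_seq sequence really is detached from the column as described above, relinking it could look roughly like the sketch below. This is an assumption, not the exact fix from the original post: it presumes a PostgreSQL metadata database and a primary key column named id, and the helper `relink_sequence_sql` is hypothetical. It only builds the statements, so they can be reviewed before anything is run against a backed-up database.

```python
from typing import List


def relink_sequence_sql(table: str, column: str, sequence: str) -> List[str]:
    """Build PostgreSQL statements that attach an existing sequence to a column.

    Hypothetical helper: identifiers are interpolated as-is, so only pass
    trusted, known names.
    """
    return [
        # Tie the sequence's lifetime to the column.
        f"ALTER SEQUENCE {sequence} OWNED BY {table}.{column};",
        # Make inserts without an explicit id draw the next value from the sequence.
        f"ALTER TABLE {table} ALTER COLUMN {column} "
        f"SET DEFAULT nextval('{sequence}');",
        # Move the sequence past the highest id already stored in the table.
        f"SELECT setval('{sequence}', "
        f"COALESCE((SELECT MAX({column}) FROM {table}), 0) + 1, false);",
    ]


# Names taken from the post; assumes the column is called "id".
for statement in relink_sequence_sql("ab_view_menu", "id", "ab_view_menu_id_seq"):
    print(statement)
```

Printing the statements first, rather than executing them directly, keeps the change easy to inspect and to run by hand inside a transaction.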

Airflow dag bag upgrade

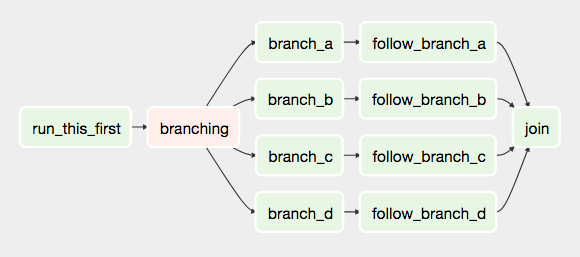

I encountered the same problem after upgrading to Airflow 2.4.1 (from 2.3.4). I have found no official references for this fix, so use it carefully and back up your db first :)

I think there's no other solution than to reset the db.

I updated my Airflow setup from 2.3.3 to 2.4.0 and started to get these errors in the UI: "DAG seems to be missing from DagBag". The scheduler log shows "ERROR - DAG not found in serialized_dag table".

One of my Airflow instances seemed to work well for the old DAGs, but when I add new DAGs I get the error. On the other Airflow instance, every DAG was outputting this error, and the only way out of this mess was to delete the db and init it again. Deleting the db is not the solution I want to use in the future. Is there any other way this can be fixed?

The new DAG is shown in the Airflow UI and it can be activated. The error message appears when I click the DAG from the main view. It's also weird that I use the same Airflow image in both of my instances, and still one instance has the newly added Datasets menu on the top bar and the other doesn't.

Airflow version: 2.4.0 (same error in 2.4.1)

Two isolated Airflow main instances (dev, prod) with CeleryExecutor, and each of these instances has 10 worker machines. I'm running the setup on each machine using a docker compose conf and a shared .env file that ensures that the setup is the same on the main machine and the worker machines.

kill_zombies(self, zombies, session=None)

Fail given zombie tasks, which are tasks that haven't had a heartbeat for too long, in the current DagBag.

Parameters

- zombies (_processing.SimpleTaskInstance) – zombie task instances to kill.
- session – DB session.

bag_dag(self, dag, parent_dag, root_dag)

Adds the DAG into the bag, recurses into sub dags. Throws AirflowDagCycleException if a cycle is detected in this dag or its subdags.

collect_dags(self, dag_folder=None, only_if_updated=True, include_examples=conf.getboolean('core', 'LOAD_EXAMPLES'), safe_mode=conf.getboolean('core', 'DAG_DISCOVERY_SAFE_MODE'))

Given a file path or a folder, this method looks for python modules, imports them and adds them to the dagbag collection.

Note that if a .airflowignore file is found while processing the directory, it will behave much like a .gitignore, ignoring files that match any of the regex patterns specified in the file. The patterns in .airflowignore are treated as un-anchored regexes, not shell-like glob patterns.

collect_dags_from_db(self)

Collects DAGs from database.
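To make the difference between un-anchored regexes and shell-like globs in .airflowignore concrete, here is a minimal sketch. It is not Airflow's implementation: the helper `is_ignored`, the sample patterns and the sample paths are all hypothetical, and it only assumes that a pattern counts as a match when it is found anywhere in the path.

```python
import fnmatch
import re
from typing import Iterable


def is_ignored(path: str, patterns: Iterable[str]) -> bool:
    """Return True if any regex matches anywhere in the path (un-anchored)."""
    return any(re.search(pattern, path) for pattern in patterns)


# Hypothetical .airflowignore contents, one regex per line.
patterns = ["project_a", r"tenant_[\d]"]

print(is_ignored("dags/project_a/etl.py", patterns))    # True: "project_a" occurs in the path
print(is_ignored("dags/tenant_1/report.py", patterns))  # True: "tenant_[\d]" occurs in the path
print(is_ignored("dags/project_b/etl.py", patterns))    # False: no pattern occurs

# Read as a shell glob, the same string would have to match the whole path.
print(fnmatch.fnmatch("dags/project_a/etl.py", "project_a"))  # False
```

The practical consequence is that a short pattern such as project_a excludes every file whose path contains that text, so patterns may need to be more specific than the equivalent glob would be.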

Airflow dag bag zip

DagBag(dag_folder=None, executor=None, include_examples=conf.getboolean('core', 'LOAD_EXAMPLES'), safe_mode=conf.getboolean('core', 'DAG_DISCOVERY_SAFE_MODE'), store_serialized_dags=False)

Bases: _dag.BaseDagBag, _mixin.LoggingMixin

A dagbag is a collection of dags, parsed out of a folder tree and has high level configuration settings, like what database to use as a backend and what executor to use to fire off tasks. This makes it easier to run distinct environments for say production and development, tests, or for different teams or security profiles. What would have been system level settings are now dagbag level so that one system can run multiple, independent settings sets.

Parameters

- dag_folder (unicode) – the folder to scan to find DAGs
- executor – the executor to use when executing task instances in this DagBag
- include_examples (bool) – whether to include the examples that ship with airflow or not
- has_logged – an instance boolean that gets flipped from False to True after a file has been skipped. This is to prevent overloading the user with logging messages about skipped files. Therefore only once per DagBag is a file logged being skipped.
- store_serialized_dags (bool) – Read DAGs from DB if store_serialized_dags is True. If False DAGs are read from python files.

CYCLE_NEW = 0

CYCLE_IN_PROGRESS = 1

CYCLE_DONE = 2

DAGBAG_IMPORT_TIMEOUT

UNIT_TEST_MODE

SCHEDULER_ZOMBIE_TASK_THRESHOLD

dag_ids

size(self)

Returns the amount of dags contained in this dagbag.

get_dag(self, dag_id, from_file_only=False)

Gets the DAG out of the dictionary, and refreshes it if expired.

Parameters

- from_file_only (bool) – returns a DAG loaded from file.

process_file(self, filepath, only_if_updated=True, safe_mode=True)

Given a path to a python module or zip file, this method imports the module and looks for dag objects within it.
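The CYCLE_NEW, CYCLE_IN_PROGRESS and CYCLE_DONE constants suggest a three-state depth-first search behind the cycle check that bag_dag reports through AirflowDagCycleException. The following is a sketch of that general technique on a plain adjacency dict, not Airflow's own code; `check_no_cycle`, `CycleError` and the graph format are hypothetical.

```python
from typing import Dict, List

CYCLE_NEW = 0          # node not visited yet
CYCLE_IN_PROGRESS = 1  # node is on the current DFS path
CYCLE_DONE = 2         # node and everything downstream of it is fully explored


class CycleError(Exception):
    """Hypothetical stand-in for AirflowDagCycleException."""


def check_no_cycle(graph: Dict[str, List[str]]) -> None:
    """Raise CycleError if the directed graph (task -> downstream tasks) has a cycle."""
    state = {node: CYCLE_NEW for node in graph}

    def visit(node: str) -> None:
        current = state.get(node, CYCLE_NEW)
        if current == CYCLE_DONE:
            return  # already proven cycle-free from here
        if current == CYCLE_IN_PROGRESS:
            # Reaching a node that is still on the current path closes a loop.
            raise CycleError(f"cycle detected at task {node!r}")
        state[node] = CYCLE_IN_PROGRESS
        for downstream in graph.get(node, []):
            visit(downstream)
        state[node] = CYCLE_DONE

    for node in graph:
        visit(node)


# An acyclic chain passes silently.
check_no_cycle({"extract": ["transform"], "transform": ["load"], "load": []})

# A two-task loop is reported.
try:
    check_no_cycle({"a": ["b"], "b": ["a"]})
except CycleError as err:
    print(err)
```

The in-progress state is what distinguishes a real cycle from a node that is merely reachable by two different paths, which a simple visited flag cannot do.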

